On Wednesday the 28th of October the annual Google Cloud Summit took place in the Taets Gallery in Amsterdam. It was an opportunity to gather new ideas and discover the future of the cloud. At Infofarm we have also started looking at the possibilities the cloud has to offer, so this was the moment to send someone on a mission. That someone was me! It was my first time attending this kind of event.

Once there, I attended as many machine learning and AI talks as possible. Although I already knew quite a lot about the Google Cloud services, the talks were interesting enough to keep me motivated throughout the day. In total I attended five talks.

Not all were equally interesting, but the purpose was most certainly achieved: to prepare and motivate us to use new AI and machine learning technologies in the cloud. To give you, the reader, a view of my experience, I wrote a short summary of the talks I found most interesting.

Talk 1: Going Beyond the Traditional Enterprise Data Warehouse with BigQuery (Speaker: Robert Saxby, Google)

This was the first talk of the day. After a pretty long keynote, we could start with the real deal. The presentation itself tackled BigQuery, the serverless cloud-native data warehouse service of Google. First, we received a very short introduction on BigQuery. The speaker, I think, was under the impression that everybody already knew what BigQuery was.

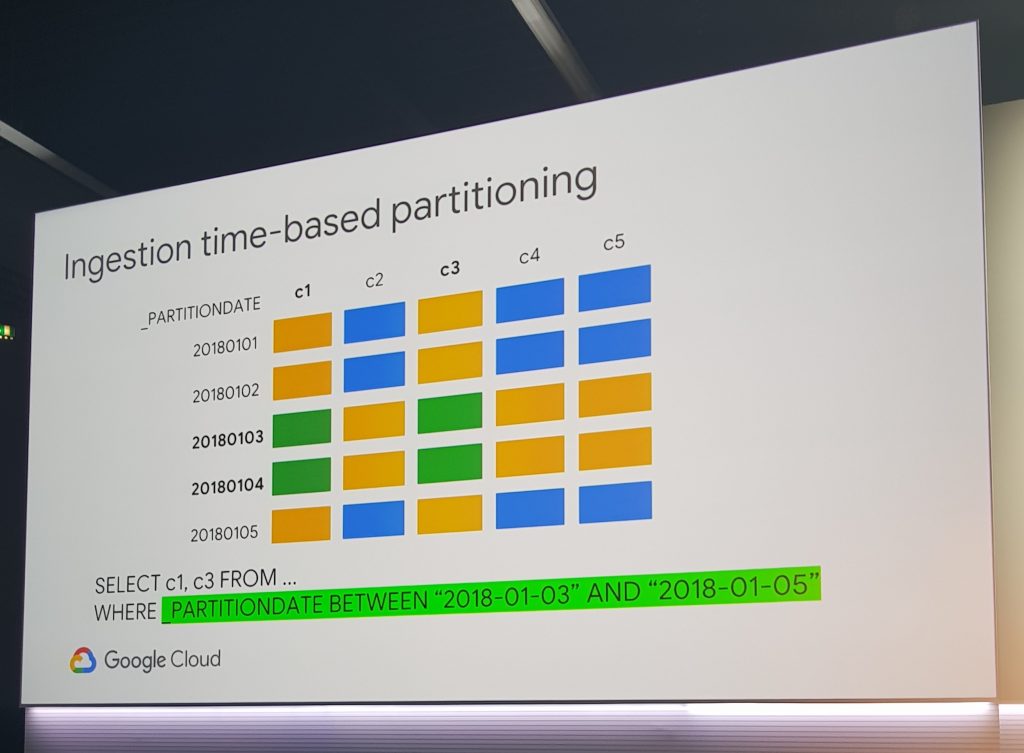

However, for me this was not the case. It became clear that this was going to be quite a technical talk. Robert discussed some architectural designs and gave an inside look at BigQuery, backed by some best practices for partitioning. In the demo the theory was put into practice: a complex query was submitted and processed a couple of terabytes in just a few seconds. It’s clear that this is a very powerful tool for processing a lot of data without having to worry about the infrastructure. Just deliver your data to BigQuery and let Google handle the rest of the work.
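To make the partitioning point a bit more concrete, here is a minimal sketch of why it matters. This is my own toy model in plain Python, not BigQuery's internals and not something shown in the talk: the `PartitionedTable` class and its methods are hypothetical names. The idea is that when rows are stored per day, a query with a date filter only has to read the matching days.

```python
from collections import defaultdict
from datetime import date


class PartitionedTable:
    """Toy table that keeps rows in one bucket per day (hypothetical, for illustration)."""

    def __init__(self):
        self.partitions = defaultdict(list)

    def insert(self, day, row):
        self.partitions[day].append(row)

    def scan(self, start, end):
        """Return the rows between start and end (inclusive) and how many rows were read."""
        rows = []
        for day, bucket in self.partitions.items():
            if start <= day <= end:  # partitions outside the range are never read
                rows.extend(bucket)
        return rows, len(rows)


# Fill 30 daily partitions with 100 rows each.
table = PartitionedTable()
for d in range(1, 31):
    for i in range(100):
        table.insert(date(2018, 11, d), {"day": d, "value": i})

rows, rows_read = table.scan(date(2018, 11, 1), date(2018, 11, 3))
total_rows = sum(len(bucket) for bucket in table.partitions.values())
print(f"read {rows_read} of {total_rows} rows")  # read 300 of 3000 rows
```

Filtering on the partition key means only a tenth of the data is touched here, which is the same reason a partition filter can reduce the amount of data a query has to process.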

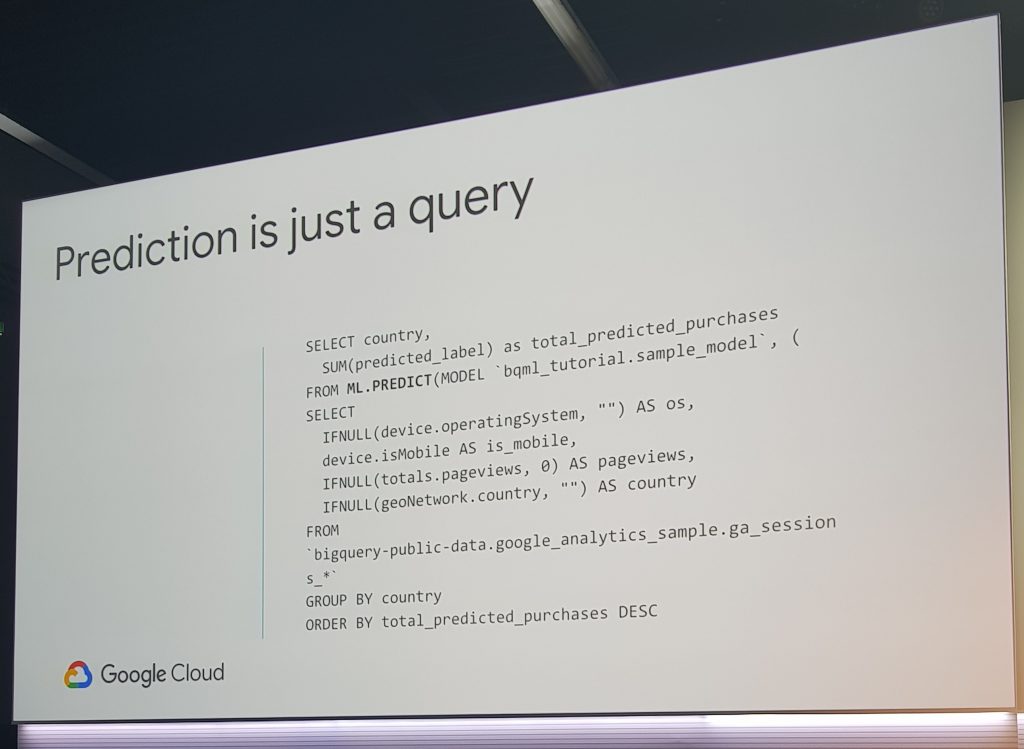

Of course, I’m also interested in some machine learning. At the end of the talk they briefly covered BigQuery ML. It lets you create and train models with SQL queries, and the models are stored in BigQuery datasets. You are only charged for the amount of data that is used.
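As an illustration of what training a model with a query can look like, here is a small sketch that builds a BigQuery ML `CREATE MODEL` statement in Python. The dataset, table and column names are hypothetical, and so are the helper functions `build_create_model_sql` and `train`; this is not the query from the demo. The `train` helper assumes the `google-cloud-bigquery` package and valid credentials, so it is defined but not called here.

```python
def build_create_model_sql(model, source_table, label, features, model_type="linear_reg"):
    """Return a BigQuery ML CREATE MODEL statement as a string."""
    columns = ", ".join(features + [label])
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = '{model_type}', input_label_cols = ['{label}']) AS\n"
        f"SELECT {columns}\n"
        f"FROM `{source_table}`"
    )


def train(sql):
    """Submit the statement; needs the google-cloud-bigquery package and credentials."""
    from google.cloud import bigquery  # imported lazily so the builder works offline

    client = bigquery.Client()
    return client.query(sql).result()  # blocks until the training job has finished


# All names below are made up for the example.
sql = build_create_model_sql(
    model="demo_dataset.trip_duration_model",
    source_table="demo_dataset.trips",
    label="duration_minutes",
    features=["distance_km", "hour_of_day"],
)
print(sql)
```

The training data is simply the result of the `SELECT`, so preparing features comes down to writing SQL.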

Talk 3: Atos: How Cloud is fundamental for your AI (Speakers: Wim Los and Robin Zondag, Atos)

Besides speakers from Google, there were also a couple of partners. Some of these partners got their own slot in which they shared their experiences with Google Cloud and how they use it. For us it is very interesting to see how other big companies use Google’s Cloud services for their machine learning projects.

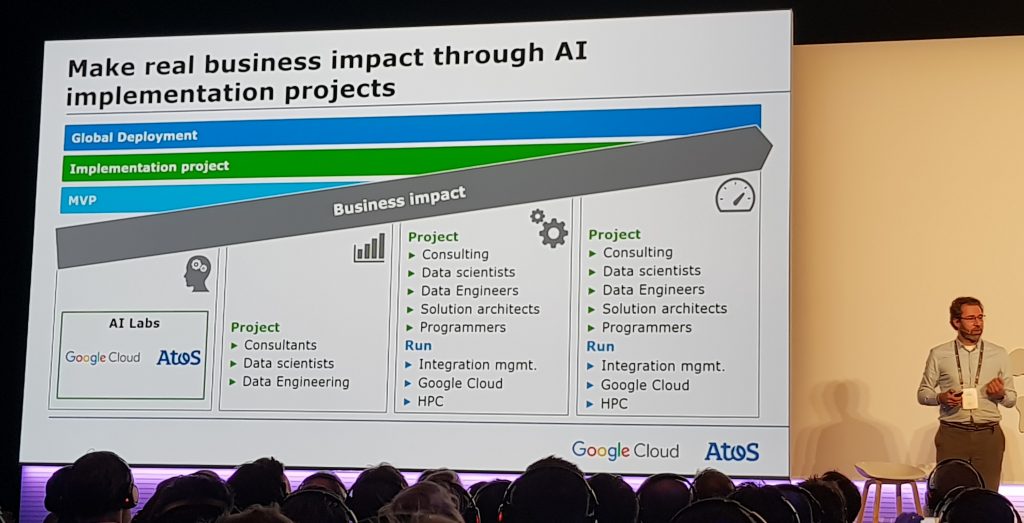

I attended the talk by Wim Los (Senior Vice President Google Alliance) and Robin Zondag (Global Head of Atos AI Labs) from Atos. Atos is a French IT company and a global leader in digital transformation. Using the cloud, it provides end-to-end Orchestrated Hybrid Cloud, Big Data, Business Applications and Digital Workplace solutions.

Atos was proud to announce that they opened their first AI Lab in the UK. The ‘Atos AI-Lab’ is the outcome of the partnership between Google and Atos. The lab offers experiments and solutions on artificial intelligence for both public and private companies. Such a lab needs a standard way of working, and this was tackled in the first part of the presentation, which explained how they handle the perfect AI project and what the perfect team for the job looks like.

In the second part of the presentation they covered where the services and technologies of Google Cloud fit in this process.

It is clear that Google Cloud can offer a lot of value to your business for machine learning projects. After hearing their success stories, I conclude that at Infofarm we can’t fall behind on this! We should shift our expertise more towards the cloud, so that we can keep offering the most innovative solutions to our clients. And hopefully we will be standing on that stage someday.

Talk 4: Hassle-free Machine Learning for Business Workshop (Speaker: Hussein Mehanna, Google)

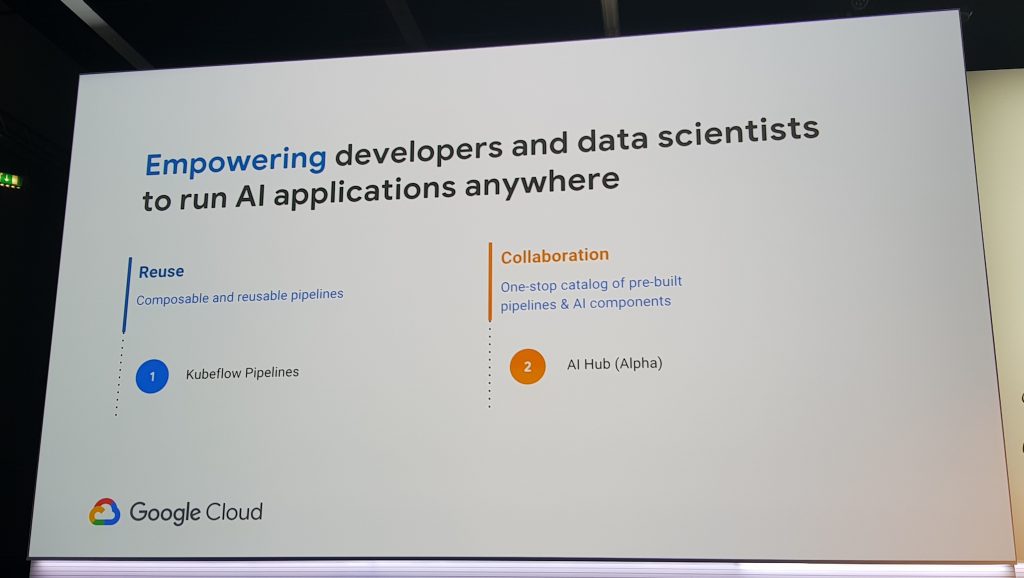

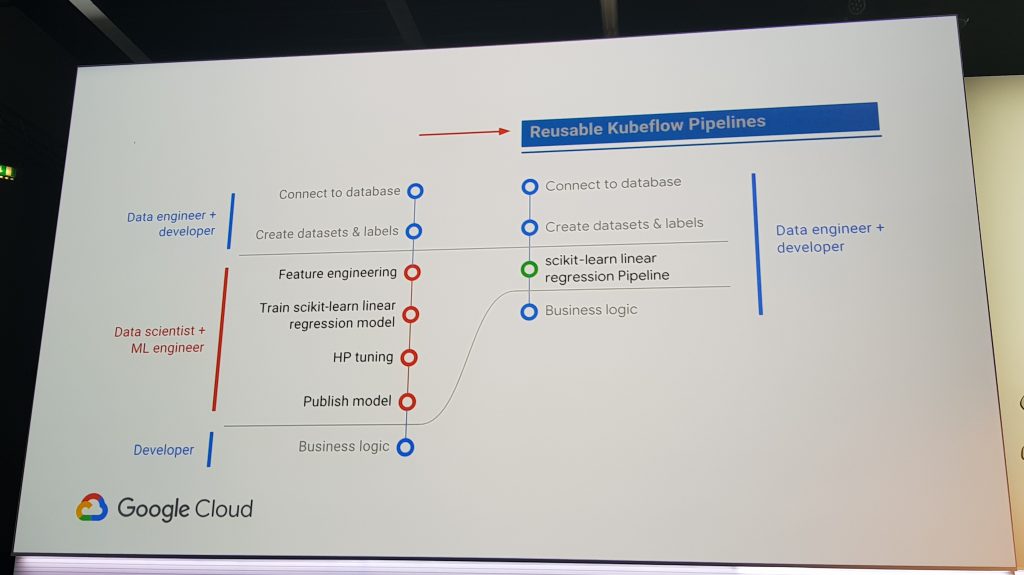

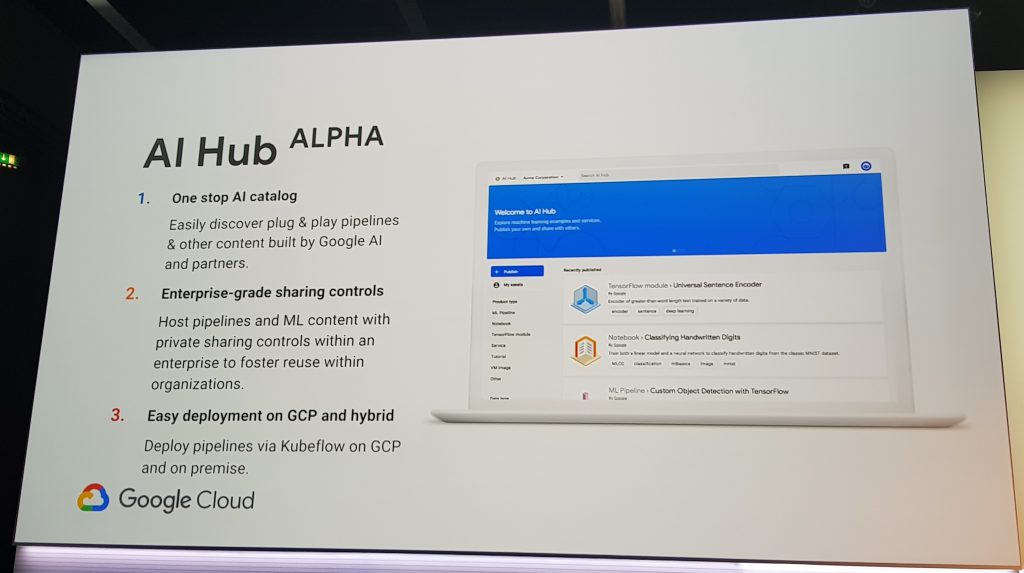

I still feel that there is a big gap between data scientists, engineers and developers for AI projects. It would be nice if Google Cloud offered some kind of solution for this. And luckily there is. This talk introduced us to Kubeflow Pipelines and AI Hub. Personally, this was the most interesting talk for me.

Because both services are fairly new, we got a high-level overview rather than a deep dive.

The talk was divided into two parts. Because it was new for me, it may be new for you too, so I made a short summary:

Part I: Kubeflow Pipelines

Kubeflow Pipelines is the new component of Kubeflow, Google’s popular open source project (which I assume is well known). It gives you a tool to build, manage and deploy machine learning workflows. By creating these pipelines, you can reuse parts of a pipeline or the entire pipeline for different applications. This also lets different kinds of users experiment with different algorithms in a safe and fast way, so you can choose the best algorithm for your application. It is also possible to extract the current ML model from the pipeline, let a data scientist adapt and retrain it, and plug it back in without impacting other parts of the pipeline.
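The idea of swapping one step without touching the rest can be sketched in a few lines of plain Python. To be clear, this is not the Kubeflow Pipelines SDK: the `Pipeline` class, its `replace` method and the step functions are hypothetical names I made up to show the concept of a pipeline built from reusable, replaceable steps.

```python
class Pipeline:
    """Ordered, named steps; each step receives the previous step's output."""

    def __init__(self, steps):
        self.steps = list(steps)  # list of (name, function) pairs

    def replace(self, name, func):
        """Return a new pipeline with one step swapped; the other steps are reused as-is."""
        if name not in [n for n, _ in self.steps]:
            raise KeyError(name)
        return Pipeline([(n, func if n == name else f) for n, f in self.steps])

    def run(self, data):
        for _, func in self.steps:
            data = func(data)
        return data


def clean(values):
    """Drop missing values."""
    return [v for v in values if v is not None]


def mean_model(values):
    """Baseline 'model': predict the mean."""
    return sum(values) / len(values)


def median_model(values):
    """Alternative 'model': predict the median, which is less sensitive to outliers."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def report(prediction):
    return f"prediction={prediction:.1f}"


baseline = Pipeline([("clean", clean), ("model", mean_model), ("report", report)])
candidate = baseline.replace("model", median_model)  # only the model step changes

data = [1, 2, None, 3, 100]
print(baseline.run(data))   # prediction=26.5
print(candidate.run(data))  # prediction=2.5
```

Both pipelines share the same cleaning and reporting steps, so comparing two algorithms comes down to replacing a single named step.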

Part II: AI Hub

The AI Hub is a catalog of AI components such as pre-built pipelines, notebooks, TensorFlow modules and more. The hub has both a private and a public catalog. In the public catalog users can discover the latest AI content from Google and its partners. For sharing content that you created within your own organization, you can use the private catalog. Again, this enables better collaboration between the different profiles in your organization.

Both services are still in alpha or beta. We will keep an eye on them for availability and new developments. Once they are fully available, we will start experimenting so that we can offer these kinds of solutions to our customers.

With a total of five talks attended, we come to the end of the summit in the Taets Gallery. I discovered some new technologies and services from Google, and I shared thoughts and ideas with other professionals in the industry. It’s clear that the cloud can offer us a lot more than we think, and that it is a path we should really pursue.

Google Cloud itself is making very ambitious progress in the AI and machine learning field. With services like AutoML, Kubeflow Pipelines and the AI Hub, Google is no longer outdone by other big cloud providers like Azure or the dominant AWS.

With this in mind, I conclude that at Infofarm we can’t fall behind. I will share my new knowledge and enthusiasm with my team so that we can start exploring new possibilities with Google Cloud. We are also looking at a first use case developed entirely with Google Cloud.

More about this later. We will keep you posted!

To be continued…

Data is gold and Infofarm wants to make this gold tangible. Infofarm wants to support its customers in processing their data by providing targeted analyses, forecasts and recommendations. Its passionate and talented team of data scientists, data developers and project managers, together with the latest technologies, provides significant added value to its projects, to each other and to its customers.

www.infofarm.be

Author: Vincent Huysman, 7 jan 2019